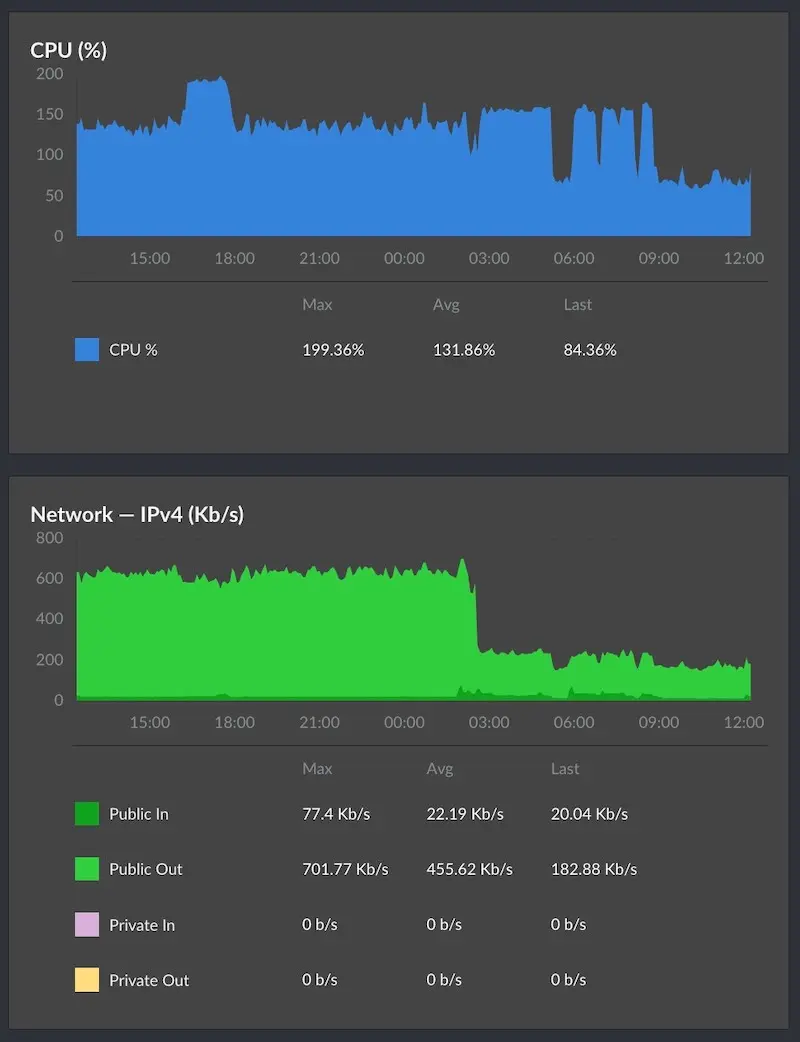

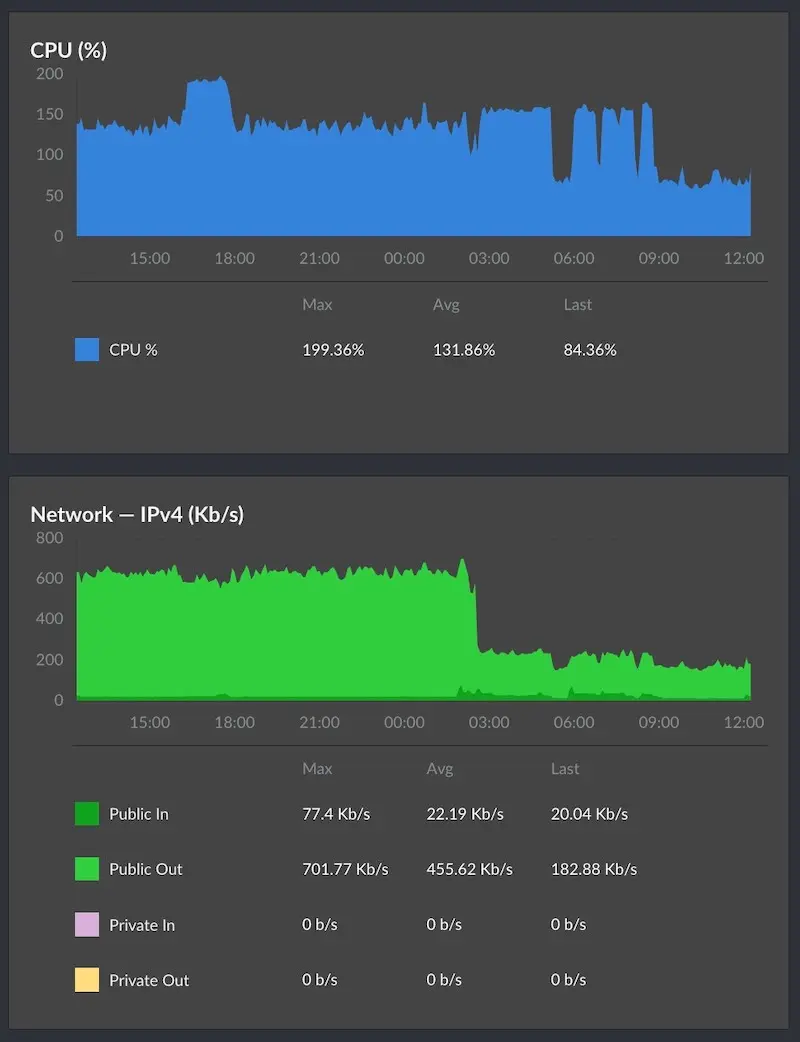

Block Spam Traffic & Spam Web Crawlers

In the era of the AI explosion, websites and servers face increasing interference from spam traffic and malicious web crawlers. These can pose serious threats to server resources, mainly in the following ways:

- Consumption of Server Resources: Spam traffic and malicious web crawlers consume server CPU, memory, bandwidth, and other resources, degrading server performance or even causing crashes.

- Disruption of Normal Server Operation: Spam traffic and malicious web crawlers send large numbers of invalid requests, preventing the server from responding properly to legitimate users and disrupting the normal operation of websites and services.

- Security Risks: Spam traffic and malicious web crawlers may probe for vulnerabilities or carry malicious code, posing security threats to the server.

Common countermeasures include:

- IP Blacklisting: Adding the IP addresses of spam traffic sources and malicious crawlers to a blacklist to prevent them from accessing the website.

- Behavior Analysis: Identifying and blocking spam traffic and malicious crawlers based on their behavioral characteristics.
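As a minimal sketch of one simple behavioral signal, request rate, Nginx's limit_req module can throttle any client IP that sends requests faster than a human visitor plausibly would. The zone name perip and the rate and burst values below are illustrative assumptions, not tuned recommendations:

```nginx
http {
    # Track request rate per client IP in a 10 MB shared-memory zone named "perip",
    # allowing an average of 5 requests per second (illustrative values)
    limit_req_zone $binary_remote_addr zone=perip:10m rate=5r/s;

    server {
        location / {
            # Permit short bursts of up to 10 extra requests, then reject the excess
            limit_req zone=perip burst=10 nodelay;
            # Reply with 429 Too Many Requests instead of the default 503
            limit_req_status 429;
        }
    }
}
```

Rejected requests are written to the error log, which helps when tuning the values. Users sharing one public IP (for example behind a corporate NAT) count against the same limit, so start with generous values.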

IP Blacklisting

Regularly inspect your website's access logs. When you identify IP addresses associated with malicious activity, you can use the "deny" directive to block them, either as individual IP addresses or as entire IP ranges. Here is an example:

```nginx
http {
    deny x.x.x.x;    # block a single IP address
    deny x.x.x.0/24; # block an entire /24 range (256 addresses)
}
```

The "deny" directive can be placed in the http, server, or location context of the Nginx configuration file.

It's worth noting that using an IP blacklist can be a somewhat passive approach, and with the widespread use of cloud services, many malicious crawlers constantly change their IP addresses. Therefore, the effectiveness of IP blacklisting may be limited.
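Because "deny" can appear at several levels, it helps to know how Nginx inherits these rules: a location that defines its own allow or deny rules does not inherit the ones from the enclosing server block. A minimal sketch, using documentation-only addresses and a hypothetical /admin/ path:

```nginx
server {
    # Applies to every location that has no allow/deny rules of its own
    deny 203.0.113.7;

    location / {
        # No access rules here, so the server-level deny above is inherited
    }

    location /admin/ {
        # This location defines its own rules, so the server-level
        # "deny 203.0.113.7" is NOT inherited; repeat it here if needed
        deny 203.0.113.7;
        deny 198.51.100.0/24;
    }
}
```

Within a single level, the rules are checked in order until the first match, so place any specific "allow" lines before a broader "deny".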

Blocking specific crawlers by User-Agent

Using the User-Agent to block specific crawlers is a common way to keep out web scrapers. The User-Agent is a request header field that browsers and crawlers use to identify themselves.

When using User-Agent to block specific crawlers, it's essential to consider the following points:

- User-Agent can be spoofed: Some scrapers may spoof their User-Agent to bypass blocking.

- User-Agent may not be unique: Different crawlers may use the same User-Agent.

The User-Agent keywords blocked by the configuration below are:

AdsBot

AhrefsBot

aiHitBot

aiohttp

Alexa Toolbar

AlphaBot

Amazonbot

ApacheBench

AskTbFXTV

Auto Spider 1.0

bingbot

BUbiNG

Bytespider

CCBot

Census

Center

CheckMarkNetwork

Cliqzbot

CoolpadWebkit

Copied

CPython

CrawlDaddy

Crawler

crawler4j

Dark

DataForSeoBot

Dataprovider

DeuSu

DigExt

Digincore

Dispatch

DnyzBot

DotBot

Download Demon

EasouSpider

EasyHttp

eright

evc-batch

ExtLinksBot

Ezooms

fasthttp

FeedDemon

Gemini

GetRight

GetWeb!

Go!Zilla

Go-Ahead-Got-It

Go-http-client

GrabNet

Hakai

HTTP_Request

HttpClient

Iframely

Indy Library

InetURL

ips-agent

jaunty

Java

JikeSpider

libwww-perl

lightDeckReports Bot

linkdexbot

LinkpadBot

LMAO

ltx71

MailChimp

Mappy

masscan

MauiBot

MegaIndex

Microsoft URL Control

MJ12bot

mstshash

muhstik

NetcraftSurveyAgent

NetTrack

newspaper

Nimbostratus-Bot

Nmap

null

package

Pcore-HTTP

PetalBot

PiplBot

PocketParser

Python-urllib

Qwantify

redback

researchscan

SafeDNSBot

scraper

SemrushBot

SeznamBot

SiteExplorer

SMTBot

spbot

SSH

SurdotlyBot

Swiftbot

Synapse

sysscan

Test

ToutiaoSpider

TurnitinBot

UniversalFeedParser

Wappalyzer

WinHTTP

Wotbox

XoviBot

YisouSpider

YYSpider

zgrab

ZmEu

Place the following rules inside the website's server block:

```nginx
# Disable crawling by tools like Scrapy
if ($http_user_agent ~* (Scrapy|HttpClient|PostmanRuntime|ApacheBench|Java|python-requests|Python-urllib|node-fetch)) {
    return 444;
}

# Disallow access for specified User-Agents and empty User-Agents.
if ($http_user_agent ~ "DataForSeoBot|Bytespider|Amazonbot|bingbot|PetalBot|CheckMarkNetwork|Synapse|Nimbostratus-Bot|Dark|scraper|LMAO|Hakai|Gemini|Wappalyzer|masscan|crawler4j|Mappy|Center|eright|aiohttp|MauiBot|Crawler|researchscan|Dispatch|AlphaBot|Census|ips-agent|NetcraftSurveyAgent|ToutiaoSpider|EasyHttp|Iframely|sysscan|fasthttp|muhstik|DeuSu|mstshash|HTTP_Request|ExtLinksBot|package|SafeDNSBot|CPython|SiteExplorer|SSH|MegaIndex|BUbiNG|CCBot|NetTrack|Digincore|aiHitBot|SurdotlyBot|null|SemrushBot|Test|Copied|ltx71|Nmap|DotBot|AdsBot|InetURL|Pcore-HTTP|PocketParser|Wotbox|newspaper|DnyzBot|redback|PiplBot|SMTBot|WinHTTP|Auto Spider 1.0|GrabNet|TurnitinBot|Go-Ahead-Got-It|Download Demon|Go!Zilla|GetWeb!|GetRight|libwww-perl|Cliqzbot|MailChimp|Dataprovider|XoviBot|linkdexbot|SeznamBot|Qwantify|spbot|evc-batch|zgrab|Go-http-client|FeedDemon|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|YisouSpider|HttpClient|MJ12bot|EasouSpider|LinkpadBot|Ezooms|^$") {
    return 444;
}
```

444 is a non-standard Nginx status code: Nginx closes the connection without sending any response. When editing these lists, avoid empty alternatives such as "Java||python-requests", because an empty branch matches every User-Agent and would block all visitors.
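As the list grows, one alternative is the map directive, which keeps the User-Agent patterns in one place in the http context and leaves a single short "if" in each server block. This is a sketch with a shortened pattern list; the variable name $blocked_ua is a hypothetical choice:

```nginx
http {
    # Set $blocked_ua to 1 for unwanted User-Agents, 0 otherwise
    map $http_user_agent $blocked_ua {
        default                                   0;
        ""                                        1;  # missing or empty User-Agent
        "~*(Scrapy|python-requests|node-fetch)"   1;  # ~* = case-insensitive regex
        "~(AhrefsBot|SemrushBot|MJ12bot|DotBot)"  1;  # ~  = case-sensitive regex
    }

    server {
        # An empty string or "0" is false in an if condition
        if ($blocked_ua) {
            return 444;
        }
    }
}
```

Exact string keys are checked first, then the regular expressions in the order they appear, so the first matching pattern decides the value.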

Block access based on file extension

```nginx
# Blocking malicious access using file type
if ($document_uri ~* \.(swp|git|env|yaml|yml|sql|db|bak|ini|docx|doc|rar|tar|gz|zip|log)$) {
    return 404;
}
```

This rule matches request URIs that end with a dot followed by one of the listed suffixes, such as swp, git, or env. Nginx returns a 404 error for every matching request, whether or not the file actually exists. The suffixes here are only a reference and can be customized as needed; for example, if you want users to be able to download doc files, remove doc|docx from the list.
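Because the pattern is anchored with $, it only matches URIs that end in one of these suffixes; a request such as /.git/config ends in "config" and is not caught. A common complement, sketched here as an assumption rather than part of the configuration above, is a location that rejects any path component beginning with a dot while still allowing /.well-known/, which services such as Let's Encrypt rely on:

```nginx
server {
    # Prefix match with ^~: when this is the longest matching prefix,
    # Nginx skips the regex locations, so /.well-known/ stays reachable
    location ^~ /.well-known/ {
    }

    # Reject any URI containing "/." (e.g. /.git/config, /.env, /.svn/entries)
    location ~ /\. {
        return 404;
    }
}
```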

Block based on keywords in the request URL

```nginx
# Blocking malicious access using keywords in the URL
if ($document_uri ~* (wordpress|phpinfo|wlwmanifest|phpMyAdmin|xmlrpc)) {
    return 404;
}
```

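Add anti-crawling rules to the website's robots.txt file. Keep in mind that robots.txt is only advisory: well-behaved crawlers honor it, but malicious ones can simply ignore it.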

```text
User-agent: MJ12bot
User-agent: YisouSpider
User-agent: SemrushBot
User-agent: SemrushBot-SA
User-agent: SemrushBot-BA
User-agent: SemrushBot-SI
User-agent: SemrushBot-SWA
User-agent: SemrushBot-CT
User-agent: SemrushBot-BM
User-agent: SemrushBot-SEOAB
user-agent: AhrefsBot
User-agent: DotBot
User-agent: MegaIndex.ru
User-agent: ZoominfoBot
User-agent: Mail.Ru
User-agent: BLEXBot
User-agent: ExtLinksBot
User-agent: aiHitBot
User-agent: Researchscan
User-agent: DnyzBot
User-agent: spbot
User-agent: YandexBot
User-Agent: MauiBot
User-Agent: PetalBot
##### Add more as needed
Disallow: /
```

Summary of Configuration for dwith.com

Below is the configuration I use on dwith.com, for reference.

Inside the website's server block:

```nginx
# Blocking Spam Spider

# Disable crawling by tools like Scrapy
if ($http_user_agent ~ (Scrapy|HttpClient|PostmanRuntime|ApacheBench|Java|python-requests|Python-urllib|node-fetch)) {
    return 444;
}

# Disallow access for specified User-Agents and empty User-Agents.
if ($http_user_agent ~ "DataForSeoBot|Bytespider|Amazonbot|bingbot|PetalBot|CheckMarkNetwork|Synapse|Nimbostratus-Bot|Dark|scraper|LMAO|Hakai|Gemini|Wappalyzer|masscan|crawler4j|Mappy|Center|eright|aiohttp|MauiBot|Crawler|researchscan|Dispatch|AlphaBot|Census|ips-agent|NetcraftSurveyAgent|ToutiaoSpider|EasyHttp|Iframely|sysscan|fasthttp|muhstik|DeuSu|mstshash|HTTP_Request|ExtLinksBot|package|SafeDNSBot|CPython|SiteExplorer|SSH|MegaIndex|BUbiNG|CCBot|NetTrack|Digincore|aiHitBot|SurdotlyBot|null|SemrushBot|Test|Copied|ltx71|Nmap|DotBot|AdsBot|InetURL|Pcore-HTTP|PocketParser|Wotbox|newspaper|DnyzBot|redback|PiplBot|SMTBot|WinHTTP|Auto Spider 1.0|GrabNet|TurnitinBot|Go-Ahead-Got-It|Download Demon|Go!Zilla|GetWeb!|GetRight|libwww-perl|Cliqzbot|MailChimp|Dataprovider|XoviBot|linkdexbot|SeznamBot|Qwantify|spbot|evc-batch|zgrab|Go-http-client|FeedDemon|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|YisouSpider|HttpClient|MJ12bot|EasouSpider|LinkpadBot|Ezooms|^$") {
    return 444;
}

# Block malicious access
if ($document_uri ~* \.(asp|aspx|jsp|swp|git|env|yaml|yml|sql|db|bak|ini|docx|doc|rar|tar|gz|zip|log)$) {
    return 404;
}

# Filter specified keywords
if ($document_uri ~* (wordpress|phpinfo|wlwmanifest|phpMyAdmin)) {
    return 444;
}

# Disallow fetching through methods other than GET, HEAD, or POST.
if ($request_method !~ ^(GET|HEAD|POST)$) {
    return 444;
}

# Blocking Spam Spider end
```

robots.txt in the website's root directory:

```text
User-agent: MJ12bot
User-agent: YisouSpider
User-agent: SemrushBot
User-agent: SemrushBot-SA
User-agent: SemrushBot-BA
User-agent: SemrushBot-SI
User-agent: SemrushBot-SWA
User-agent: SemrushBot-CT
User-agent: SemrushBot-BM
User-agent: SemrushBot-SEOAB
user-agent: AhrefsBot
User-agent: DotBot
User-agent: MegaIndex.ru
User-agent: ZoominfoBot
User-agent: Mail.Ru
User-agent: BLEXBot
User-agent: ExtLinksBot
User-agent: aiHitBot
User-agent: Researchscan
User-agent: DnyzBot
User-agent: spbot
User-agent: YandexBot
User-Agent: MauiBot
User-Agent: PetalBot
Disallow: /

User-agent: Googlebot
Disallow: /wp-includes/
Disallow: /cgi-bin/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
Disallow: /wp-content/cache/
Disallow: /author/
Disallow: /trackback/
Disallow: /feed/
Disallow: /comments/
Disallow: /search/

sitemap: https://dwith.com/wp-sitemap.xml
```
